VFL-82-JP

WARNING: LONG. Folks with ADD or who do not enjoy reading, you might want to sit this one out.

What's the right answer when it comes to "coaching churn"? You know, that search for a new championship-caliber head coach the most successful schools go through from time to time? What's the best frequency for trying coaches out? One every two years? Four? Six? How does it look for the winningest programs?

That question, or family of questions, was what got me started on some new research. I wanted to find the answers. Or more accurately, I wanted to find out if there are discernible right answers. Because there may not be. A wide variety of approaches and frequencies of coaching churn may provide equally good results. Let's find out.

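~ ~ ~

Okay, so bottom line up front, here's what I learned: 3.3 to 6.1 years, or about four and a half years on average, is the best frequency for churning through coaches to find a championship winner. That's based on the successes of many of the best teams in the college game over the past seventy years.

~ ~ ~

Now, here's how I learned it.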

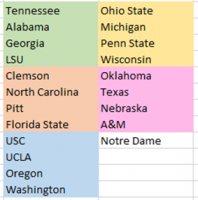

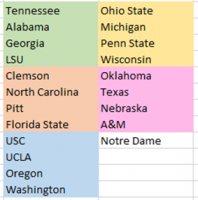

First, I selected 21 top-tier teams: 4 of the best programs from each Power 5 conference, plus Notre Dame. (I took a little liberty with what the Big 12 includes, since it has bled off some of its most successful programs in the past decade or two.) That initially looked like:

Next, I set a start and end date for the research. I chose 1950 for the start (the first year with both the AP and Coaches Polls, and late enough in football history that no amateur head coaches remained) and 2019, obviously, for the end. That yields exactly 70 seasons, a nice easy number to work with.

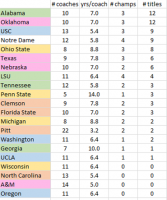

Then, time for research. I gathered three metrics for each program: how many head coaches they went through over the 70 years, how many of those coaches were national title winners (whether once or more than once), and how many national championships total the program won.

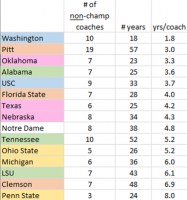

That came out looking like this (sorted by # of championships):

And almost immediately, interesting facts started to jump out of the data:

(1) I could take Wisconsin, North Carolina, A&M, and Oregon off the list: none of them won a national title in the 1950-2019 window, so they can't teach us anything about finding championship-caliber coaches. For that matter, I could remove Georgia and UCLA as well, since a single success doesn't mean much. That brought the working group down to 15 schools.

(2) The team with the fewest coaches over those 70 years was Penn State, at 5. The average PSU coach lasted 14 years. Naturally, you and I know that is skewed by Paterno's 46-year tenure. Still, it's an interesting bookend. The other bookend of that stat? Pitt, with a whopping 22 head coaches: a new coach every 3.2 years. Of course, we (Tennessee) are partly responsible for Pitt's lack of coaching longevity, as we stole away one of their two championship-caliber coaches, Johnny Majors, right after he won them the first of the two listed titles. A&M would steal Jackie Sherrill from them in a similar way not long after their 1980 title. Isn't it curious that the programs with the longest and shortest coaching tenures are both from the same state, Pennsylvania?

(3) The teams with the most championship-caliber coaches weren't exactly the same as those with the most championships. LSU and Notre Dame have each had 4 championship coaches in the past 70 years (Leahy, Parseghian, Devine, and Holtz for ND; Dietzel, Saban, Miles, and Orgeron for LSU), but LSU won only 4 titles with those gentlemen (ND was more successful, pulling in 9). The teams with the most titles, Bama and Oklahoma, actually did it with fewer coaches, 3 each (Bryant, Stallings, Saban; and Wilkinson, Switzer, Stoops). At this point in the research I was tempted to narrow the whole study down to those two programs, since they seemed to have cracked the code on finding and retaining championship coaches for maximum gain. But I decided to continue with all 15 programs to see what else they might yield collectively.
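For anyone who wants to check the arithmetic behind items (1) and (2), here's a minimal Python sketch. It assumes a simple dictionary of titles per program; only the counts the post states outright are filled in (the full 21-team table isn't reproduced), and the names `working_group` and `average_tenure` are just illustrative helpers, not anything from the original research.

```python
# Study window: 1950 through 2019, counting both end seasons.
FIRST_SEASON = 1950
LAST_SEASON = 2019
SEASONS = LAST_SEASON - FIRST_SEASON + 1  # 70

# Titles won inside the window, only for programs whose counts the post states.
# (Partial data: this is not the full 21-team table.)
titles_in_window = {
    "Wisconsin": 0,
    "North Carolina": 0,
    "Texas A&M": 0,
    "Oregon": 0,
    "Georgia": 1,
    "UCLA": 1,
    "LSU": 4,
    "Notre Dame": 9,
}


def working_group(titles: dict[str, int], min_titles: int = 2) -> list[str]:
    """Keep programs with at least `min_titles` titles; one title is treated as too little signal."""
    return sorted(name for name, n in titles.items() if n >= min_titles)


def average_tenure(num_coaches: int, seasons: int = SEASONS) -> float:
    """Average seasons per head coach across the whole window."""
    return seasons / num_coaches


if __name__ == "__main__":
    print(SEASONS)                          # 70
    print(working_group(titles_in_window))  # ['LSU', 'Notre Dame']
    print(average_tenure(5))                # Penn State: 14.0
    print(round(average_tenure(22), 1))     # Pitt: 3.2
```

With the full table plugged in, the same filter would leave the 15-school working group.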

Okay, so here is where I realized something that some of you probably noticed from the start: the churn only matters when you DON'T have a championship-caliber coach. So the data above becomes much more useful after removing the guys who won a title and tallying up the rest, meaning the average number of years each program spent on each coach who never won it all.
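The revised metric can be sketched as below. This is a minimal Python illustration, assuming each program's coaching history is a list of (coach, seasons, won_title) records; the `Coach` type, the `non_title_tenure` helper, and every coach and number in the example are hypothetical, not taken from the post's table.

```python
from typing import NamedTuple


class Coach(NamedTuple):
    name: str
    seasons: int     # seasons as head coach inside the 1950-2019 window
    won_title: bool  # won at least one national title at this school


def non_title_tenure(coaches: list[Coach]) -> float:
    """Average seasons spent on each coach who did NOT win a national title.

    Title-winning coaches are dropped entirely, since churn only matters
    while a program is still searching for its championship coach.
    """
    searching = [c.seasons for c in coaches if not c.won_title]
    if not searching:
        raise ValueError("every coach won a title; nothing to average")
    return sum(searching) / len(searching)


# Hypothetical program: names and seasons are invented, but they sum to 70.
example = [
    Coach("Coach A", 5, False),
    Coach("Coach B", 25, True),
    Coach("Coach C", 3, False),
    Coach("Coach D", 7, False),
    Coach("Coach E", 30, True),
]

print(non_title_tenure(example))  # (5 + 3 + 7) / 3 = 5.0
```

Dropping the title winners is what keeps a long, successful tenure (a Bryant or a Saban) from making a program look like it sits on coaches forever.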

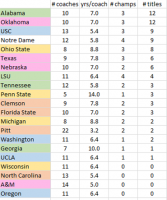

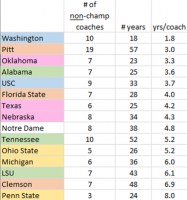

Okay, so back to the wiki pages, to come up with this:

Some new lessons emerge from this revised look:

(a) We do not want to be like Pitt or Washington, churning away every 2-3 years and getting very little in return. That's the over-energetic end of the spectrum.

(b) We also do not want to be at the Clemson / Penn State end of the scale, with a habit of sitting on a coach for long stretches without anything to show for it. Keep in mind that the Penn State figure is AFTER taking Paterno out of the data. They simply stay with a coach for much longer than the norm.

(c) So the "right answer" seems to be in the roughly 3 to 6 year range. Oklahoma, at 3.3 years, and Bama, at 3.6, are certainly successful at finding championship-caliber coaches. At the other end, Ohio State at 5.2 years and LSU at 6.1 are also very successful at finding a coach who can get to the title game. So that's the answer to the central question of the poll: 3.3 to 6.1 years, or roughly 3 to 6. Four and a half on average. That's how long the best programs spend on each coach before they churn to another one.

(d) You might think Tennessee is at the longish end of the spectrum, but that's because we stuck with Johnny Majors for almost 16 years without ever getting a national title from him. I'm not saying we shouldn't have; Johnny is a state treasure. I'm just saying that emotion won out over objective reasoning in his case. If you take Johnny out of the math, the Vols only spend 3.9 years on each coach, on average. So maybe that's one lesson some might draw: if you care only about titles, not about things like having a Native Son everyone loves as your head coach, don't get stuck with a guy for a decade-plus; if the first national title hasn't come by then, it likely never will.

(e) Good people like SJT will argue we should churn faster than we already do. Faster than one every 3.9 years. Maybe as fast as every 2 to 3 years. But I think this data shows that there is such a thing as churning too often. I'd be interested in hearing SJT's thoughts on that particular point.
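The numbers in (c) and (d) can be reproduced in a few lines. This minimal Python sketch takes the four per-program averages quoted in (c) and shows their range and mean; the mean comes out to 4.55, which is one plausible route to "four and a half," though the post doesn't spell out exactly how that figure was computed. The `average_without` helper illustrates the leave-one-out idea from (d), using a hypothetical tenure list rather than Tennessee's actual coaching record.

```python
from statistics import mean

# Average seasons per non-title coach, as quoted in item (c).
quoted = {"Oklahoma": 3.3, "Alabama": 3.6, "Ohio State": 5.2, "LSU": 6.1}

low, high = min(quoted.values()), max(quoted.values())
print(low, high)                        # 3.3 6.1
print(round(mean(quoted.values()), 2))  # 4.55


def average_without(tenures: dict[str, int], excluded: str) -> float:
    """Average tenure after dropping one named coach (a leave-one-out check)."""
    rest = [s for name, s in tenures.items() if name != excluded]
    return sum(rest) / len(rest)


# Hypothetical tenures: one 16-season outlier dominates the average.
tenures = {"Coach A": 16, "Coach B": 4, "Coach C": 3, "Coach D": 5, "Coach E": 3}
print(sum(tenures.values()) / len(tenures))  # 6.2
print(average_without(tenures, "Coach A"))   # 3.75
```

The point of the second half is simply that a single long, title-less tenure can move a program's average by a couple of years on its own.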

Okay, that's it. I think I've answered the initial questions, at least to my own satisfaction. How about yours? If you're still reading after all this, you certainly care about college football trends. I'd like to hear what you think.

Thanks for joining me while I slogged through these thoughts.

Go Vols!

Notes:

(a) On championships counted: unlike ESPN, I firmly believe more than just the AP poll counts in deciding who won a national title. The Coaches Poll is equally valuable, and before either of those polls existed, there were a number of useful rating systems, some of which continue to award national titles to this day. But it does get confusing to count every little computer system that some geeky economist comes up with. So here's how I decided what counted. I started with the list of title winners on the Wikipedia page "College football national championships in NCAA Division I FBS" and gave credit to any team where two or more polls/ratings agreed. That eliminates the after-the-fact one-offs, like Sagarin or CFRA going back and awarding titles from decades before their systems ever existed. The only time I counted a single source as valid by itself was for the AP or UPI/USA Today.

(b) For those who still crave more on championships and how they were awarded over the decades, I found this to be a nice read:

SMQ: A brief history of college football national championship claims
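The counting rule in note (a) boils down to a simple yes/no test per team and season. Here's a minimal Python sketch, assuming each season's claims are available as a set of selector names per team; "AP" and "Coaches" stand in for the AP poll and the UPI/USA Today Coaches Poll, while the other selector labels and the example teams are hypothetical.

```python
# Selectors whose pick counts even when no other poll or rating agrees.
STANDALONE = {"AP", "Coaches"}  # AP poll; UPI/USA Today Coaches Poll


def counts_as_title(selectors: set[str]) -> bool:
    """Credit a title if the AP or Coaches Poll picked the team on its own,
    or if two or more polls/ratings agree."""
    return bool(selectors & STANDALONE) or len(selectors) >= 2


# Hypothetical claims for one season: team -> selectors naming it champion.
claims = {
    "Team X": {"AP", "Coaches", "Rating 1"},
    "Team Y": {"Rating 2", "Rating 3"},
    "Team Z": {"Rating 4"},  # a lone after-the-fact rating: not counted
}

credited = sorted(team for team, sel in claims.items() if counts_as_title(sel))
print(credited)  # ['Team X', 'Team Y']
```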
